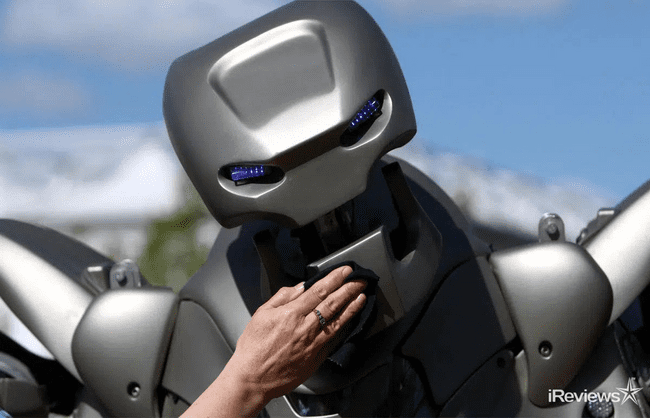

This Robot Can Follow Spoken Instructions

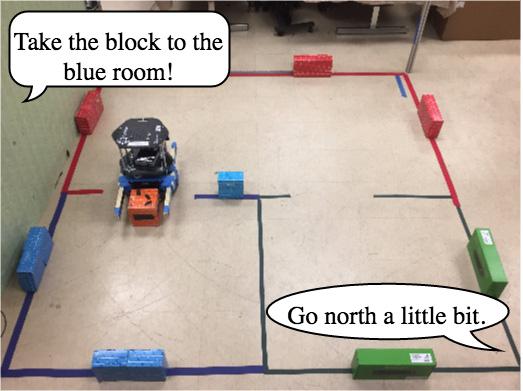

At the 2017 Robotics: Science and Systems conference in Boston, Massachusetts, researchers unveiled a robot that can follow human instructions. Three Brown University students and postdoctoral researcher Lawson L.S. Wong built it to understand commands given in natural language.

The robot began as an undergraduate project by Dilip Arumugam and Siddharth Karamcheti; Arumugam is now a graduate student at Brown. The third student, Nakul Gopalan, is also a graduate student. All four researchers work in the lab of Stefanie Tellex, a Brown computer science professor who specializes in human-robot collaboration.

Autonomous Robot

“We ultimately want to see robots that are helpful partners in our homes and workplaces.”

– Stefanie Tellex

The research makes robots better at following spoken instructions, whether those instructions are abstract or very specific. Arumugam says, “The issue we’re addressing is language grounding, which means having a robot take natural language commands and generate behaviors that successfully complete a task. The problem is that commands can have different levels of abstraction, and that can cause a robot to plan its actions inefficiently or fail to complete the task at all.”

Imagine a human working in a warehouse alongside a robotic forklift. The command “Grab that pallet” is abstract because it implies several sub-tasks: the robot must line up the lift, slide the forks under the pallet, and lift it. By contrast, the command “Tilt the forks back a little” is specific and involves only one action.
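
To make the distinction concrete, here is a minimal Python sketch built on a hypothetical task table (the names `HIERARCHY` and `expand`, and the task labels, are illustrative and not taken from the Brown system). An abstract command expands into its sub-tasks, while a specific command is already a single primitive action.

```python
# Hypothetical task table for the forklift example. Each abstract task maps
# to an ordered list of subtasks; any task not in the table is primitive.
HIERARCHY = {
    "grab_pallet": ["line_up_lift", "forks_under_pallet", "lift_forks"],
}


def expand(task: str) -> list[str]:
    """Recursively expand a task into the primitive actions that carry it out."""
    if task not in HIERARCHY:
        return [task]  # primitive: the robot can execute it directly
    actions: list[str] = []
    for subtask in HIERARCHY[task]:
        actions.extend(expand(subtask))
    return actions


print(expand("grab_pallet"))      # ['line_up_lift', 'forks_under_pallet', 'lift_forks']
print(expand("tilt_forks_back"))  # ['tilt_forks_back']
```

Because `expand` recurses, the same table could hold deeper hierarchies, with sub-tasks that are themselves abstract.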

Most of today’s robots have trouble telling the abstract command in this example apart from the specific one. They look for cues in a command and its sentence structure, infer a desired action from those cues, and then hand that inference to a planning algorithm. Because they do not take into account whether the instruction is specific or abstract, they are liable to over- or under-plan, which causes delays before and while the robot acts.

Creating A Model

The new system goes a step further than existing models. Besides inferring what the command asks for, it analyzes the language to determine the command’s level of abstraction.

“That allows us to couple our task inference as well as our inferred specificity level with a hierarchical planner,” Arumugam says, “so we can plan at any level of abstraction. In turn, we can get dramatic speed-ups in performance when executing tasks compared to existing systems.”

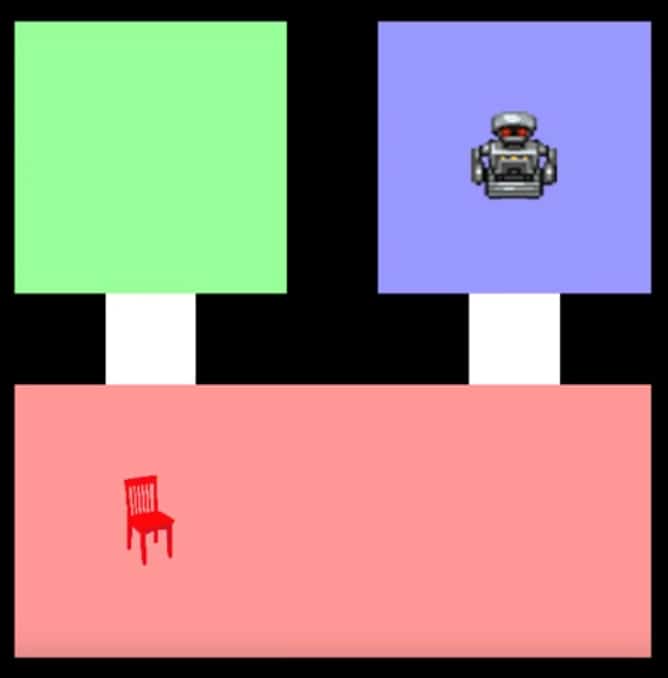

To train the system, the Providence, Rhode Island-based researchers used Amazon’s crowdsourcing marketplace, Mechanical Turk. Participants recruited there watched the robot perform a task in a virtual domain called Cleanup World. This online world contained a few color-coded rooms, a robot, and an object to manipulate; in this project, the object was a chair that could be moved between rooms.

The participants were then asked what instructions they would have given the robot to make it perform the task they had just watched. They gave three types of instructions: abstract, very specific, and somewhere in between. For example, suppose a participant watched the robot move the chair from the red room to the blue room. An abstract instruction might be “Take the chair to the blue room,” while a very specific one would be “Go three steps north, turn left, take three steps west.”

Testing

The participants’ instructions served as training data, teaching the system what kind of language belongs to each level of abstraction. The system eventually learned both to infer the task a command describes and to pinpoint the command’s level of abstraction. With both pieces of information, it runs a hierarchical planning algorithm that plans at the matching level, so it spends no more planning effort than the command requires.
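
The infer-then-plan pipeline described above can be sketched in Python. This is a toy under stated assumptions, not the Brown team’s implementation: the keyword rule in `infer_level` stands in for the learned model, the room layout in `ROOM_GRAPH` is invented, the sketch collapses the three levels of abstraction into two, and every name is hypothetical. The point it illustrates is that an abstract command is planned by searching over a few rooms, while a specific command is translated directly into primitive steps with no search at all.

```python
import re
from collections import deque

# Hypothetical Cleanup World-style layout: which color-coded rooms connect.
ROOM_GRAPH = {"red": ["green"], "green": ["red", "blue"], "blue": ["green"]}
NUMBERS = {"one": 1, "two": 2, "three": 3, "four": 4, "five": 5}
LOW_LEVEL_CUES = {"north", "south", "east", "west", "left", "right", "step", "steps"}


def infer_level(command: str) -> str:
    """Stand-in for the learned specificity model (an assumption): guess the
    command's level of abstraction from surface cues."""
    words = set(re.findall(r"[a-z]+", command.lower()))
    return "low" if words & LOW_LEVEL_CUES else "high"


def parse_count(words: list[str]) -> int:
    """Return the first number word or digit in a clause, defaulting to 1."""
    for w in words:
        if w in NUMBERS:
            return NUMBERS[w]
        if w.isdigit():
            return int(w)
    return 1


def plan_low(command: str) -> list[str]:
    """Specific commands already spell out primitive actions: translate each
    comma-separated clause directly, with no search."""
    actions: list[str] = []
    for clause in command.lower().split(","):
        words = re.findall(r"[a-z0-9]+", clause)
        if "turn" in words:
            actions.append("turn_" + words[-1])
        else:
            actions.extend([words[-1]] * parse_count(words))
    return actions


def plan_high(command: str, start_room: str) -> list[str]:
    """Abstract commands name a goal: breadth-first search over rooms
    (a tiny state space) instead of over individual steps."""
    match = re.search(r"([a-z]+) room", command.lower())
    if match is None:
        raise ValueError(f"no target room in {command!r}")
    goal = match.group(1)
    parent = {start_room: None}  # room -> room it was reached from
    queue = deque([start_room])
    while queue:
        room = queue.popleft()
        if room == goal:
            break
        for nxt in ROOM_GRAPH.get(room, []):
            if nxt not in parent:
                parent[nxt] = room
                queue.append(nxt)
    if goal not in parent:
        raise ValueError(f"room {goal!r} is unreachable")
    path = []
    room = goal
    while room != start_room:  # walk back from the goal to the start
        path.append(f"move_chair_to({room})")
        room = parent[room]
    return path[::-1]


def plan(command: str, start_room: str = "red") -> list[str]:
    """Infer the level of abstraction first, then plan at that level."""
    if infer_level(command) == "low":
        return plan_low(command)
    return plan_high(command, start_room)


print(plan("Take the chair to the blue room"))
print(plan("Go three steps north, turn left, take three steps west"))
```

With the invented layout, the abstract command yields two room-level moves (through the green room), and the specific command yields seven primitive steps. A keyword rule like this one is brittle (“the room on the left” would be misread as low-level), which is why a learned model is the more realistic choice.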

The researchers tested their model in the virtual Cleanup World and also on a physical, Roomba-like robot. Their results show that when the robot infers both the task and the specificity of a command, it responds within one second 90% of the time. When it does not infer specificity, planning takes 20 seconds or more for half of all tasks.

“This work is a step toward the goal of enabling people to communicate with robots in much the same way that we communicate with each other.”

– Stefanie Tellex

This technology hasn’t been released to the public yet. But if you want a family-friendly robot that comes equipped with voice recognition and costs under $1,000, check out Rokid.

Sources: Brown University